Hello,

When using objects as sources, it would be very useful to normalize the source power to the surface area of the object. A good example is a blackbody source, where the power emitted per unit surface area (the radiant exitance) is well known.
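To illustrate why the area is the missing piece: for a blackbody (or grey body), the total emitted power is emissivity × σT⁴ × area, so once the area is known the source power follows directly. The sketch below is a minimal illustration under those assumptions. The function name and the example temperature are hypothetical. The result is the total power over all wavelengths; if only a limited spectral band is traced, you would instead need to integrate Planck's law over that band.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4 (CODATA 2018 value, truncated).
SIGMA = 5.670374419e-8


def blackbody_source_power(temperature_k, area_mm2, emissivity=1.0):
    """Total radiated power (W), over all wavelengths, of a grey/black surface.

    Hypothetical helper: area_mm2 is the emitting surface area in mm^2,
    e.g. the total surface area of the source object.
    """
    area_m2 = area_mm2 * 1e-6  # convert mm^2 to m^2
    exitance = emissivity * SIGMA * temperature_k ** 4  # radiant exitance, W/m^2
    return exitance * area_m2


# Hypothetical example: a 6 mm^2 surface at 1000 K.
power = blackbody_source_power(1000.0, 6.0)
print(f"source power = {power:.4f} W")  # prints "source power = 0.3402 W"
```

The resulting value is what you would enter as the source power, which is why the object's surface area is needed in the first place.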

Internally Zemax must be calculating the surface area when doing the raytrace in order to distribute the rays evenly.

Is it possible to access this information?

Has someone already created a macro to do this for generic objects?

thanks,

Ross


Normalizing illumination using source objects.

Best answer by Sven.Stöttinger

Hi Ross,

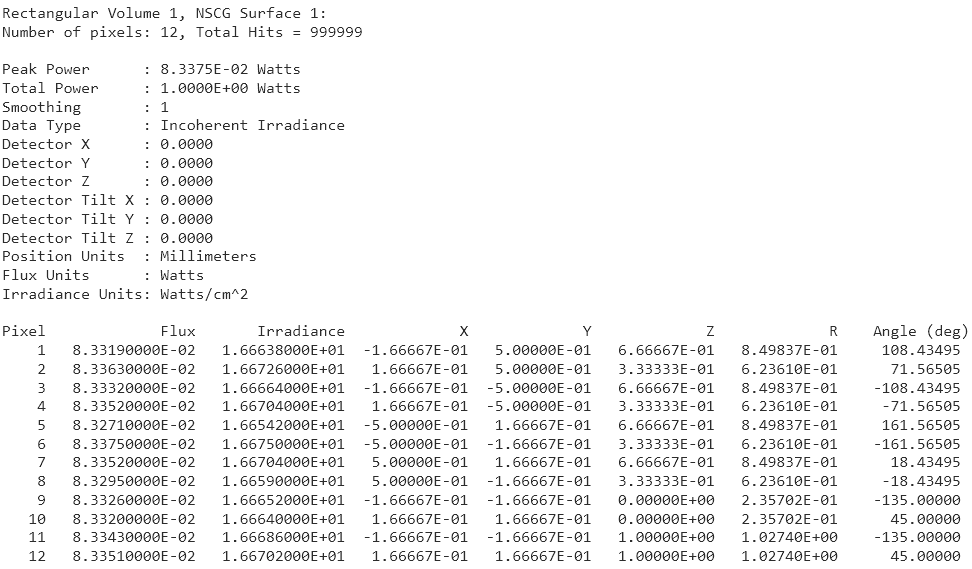

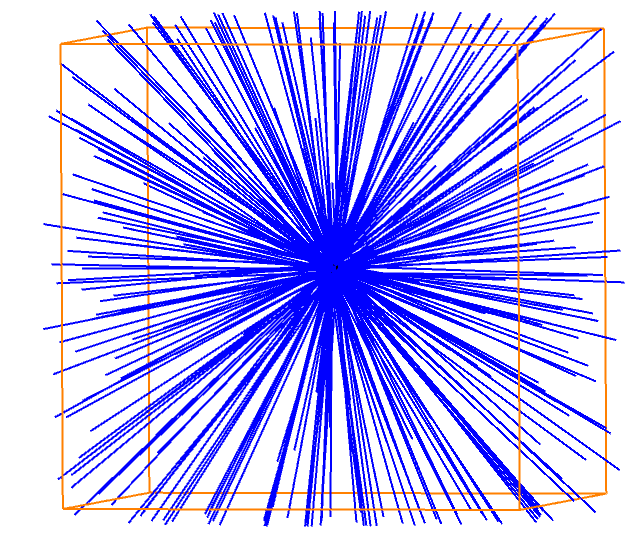

this is a bit of a work-around, but one can get to the total surface area of a volume object by using it as a detector first. In the text detector viewer the flux and irradiance of the objects sub-pixels are stated. In this simple case I used a rectangular volume with a surface area of 6 mm2 with a small rectangular volume source placed within the object. You can calculate the area of each pixel triangle with the flux and irradiance data and sum it up to the total surface area.
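The area calculation described above can be sketched in Python, assuming the per-triangle flux (W) and irradiance (W/mm²) values have already been copied out of the text detector viewer into two lists. The function name and the sample numbers are hypothetical, and parsing the exported text file is not shown because its exact layout is not given here. Since a triangle that receives no rays has zero irradiance, its area cannot be recovered this way, so the sketch counts such triangles rather than silently dropping them.

```python
def total_surface_area(flux_w, irradiance_w_per_mm2):
    """Sum per-triangle areas (mm^2) from detector flux and irradiance data.

    Hypothetical helper. Returns (total_area_mm2, n_unlit), where n_unlit
    counts triangles with zero irradiance, whose area cannot be recovered.
    """
    if len(flux_w) != len(irradiance_w_per_mm2):
        raise ValueError("flux and irradiance lists must have the same length")
    total_area = 0.0
    n_unlit = 0
    for flux, irradiance in zip(flux_w, irradiance_w_per_mm2):
        if irradiance > 0.0:
            # irradiance = flux / area, so area = flux / irradiance
            total_area += flux / irradiance
        else:
            n_unlit += 1
    return total_area, n_unlit


# Hypothetical data for four triangles, each 0.5 mm^2 in area.
flux = [0.010, 0.012, 0.008, 0.011]
irradiance = [0.020, 0.024, 0.016, 0.022]
area, unlit = total_surface_area(flux, irradiance)
print(f"total area = {area:.3f} mm^2, unlit triangles = {unlit}")
# prints "total area = 2.000 mm^2, unlit triangles = 0"
```

If `unlit` is nonzero, the sum underestimates the area; placing the source inside the volume, as in the example above, and tracing enough rays helps ensure every facet is hit. If the detector reports irradiance per cm² rather than per mm², convert before dividing.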

Hope this helps out.

Best regards,

Sven
