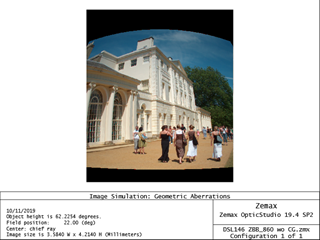

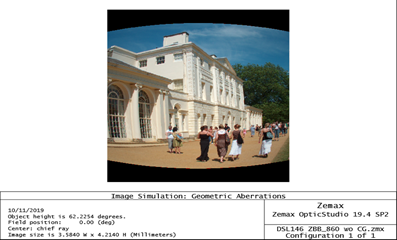

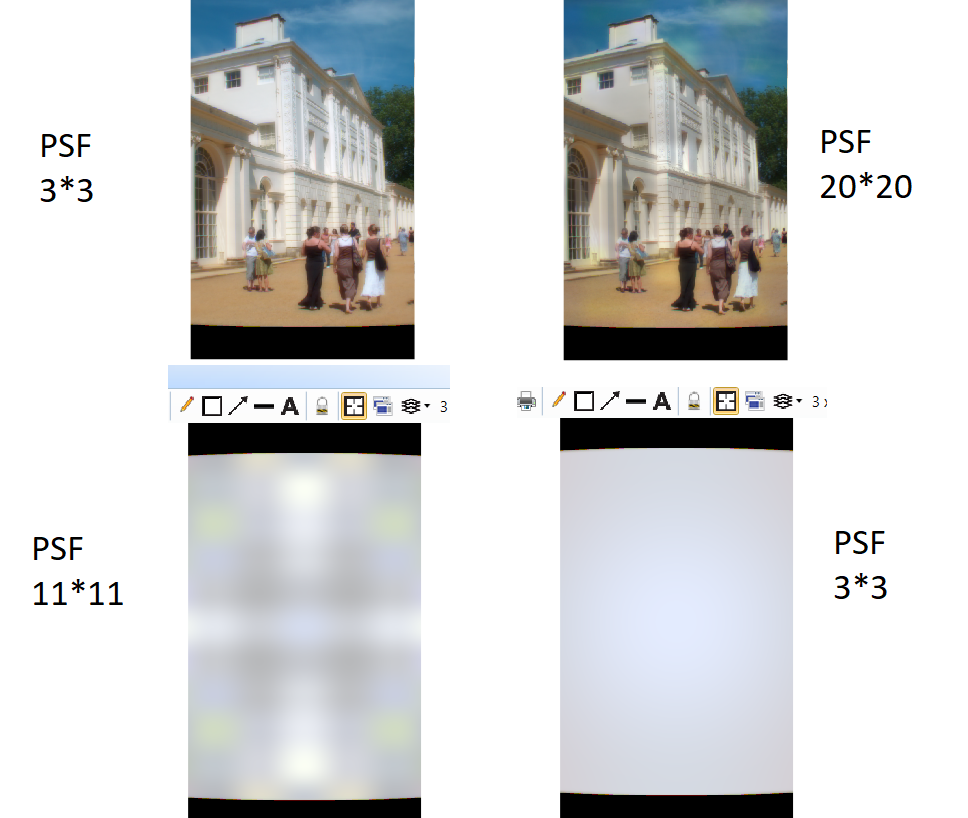

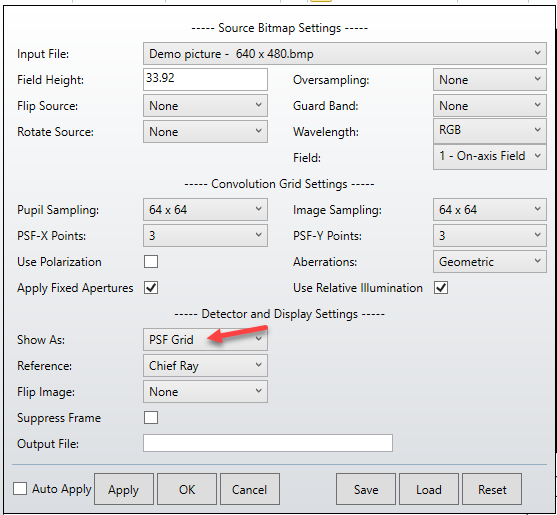

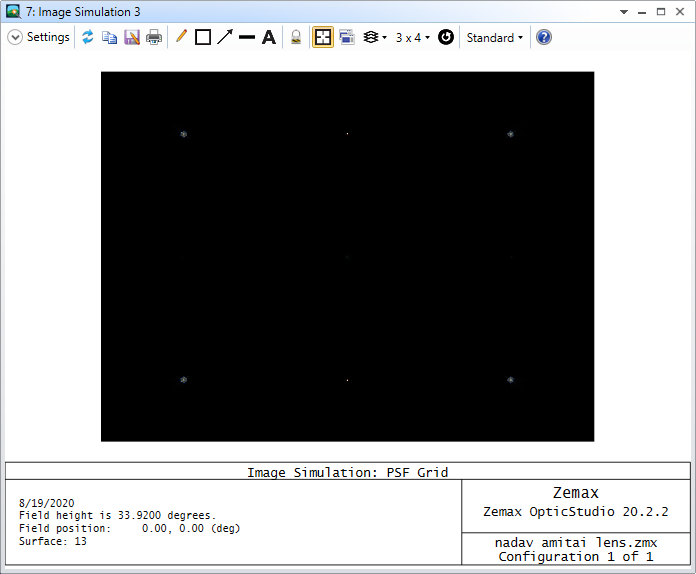

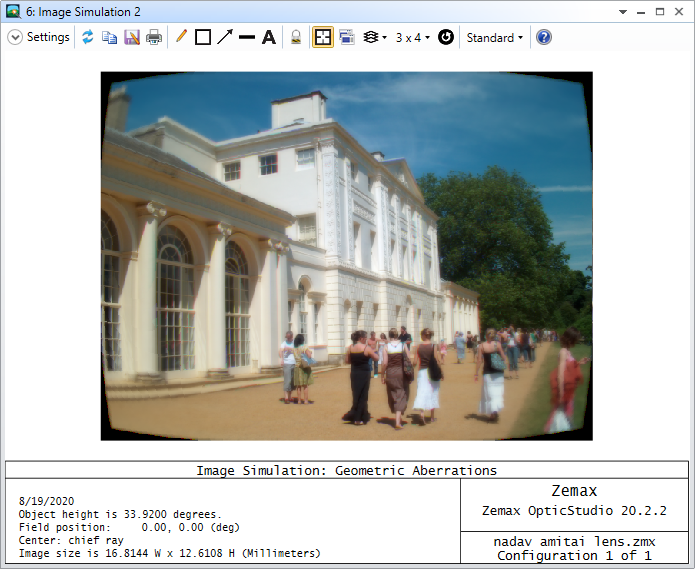

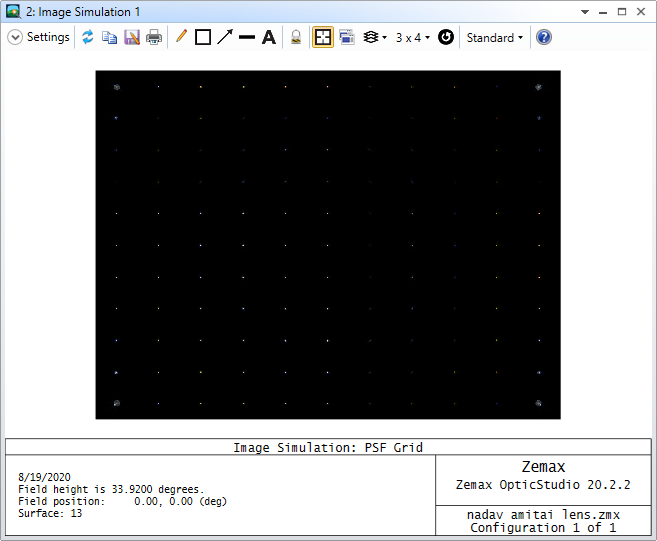

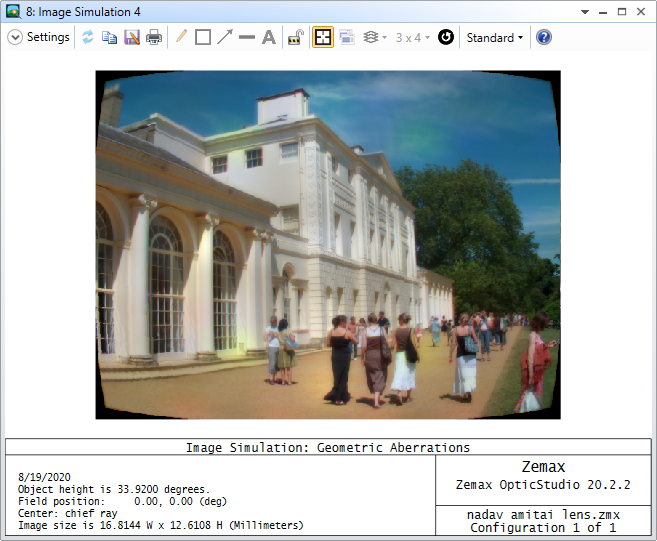

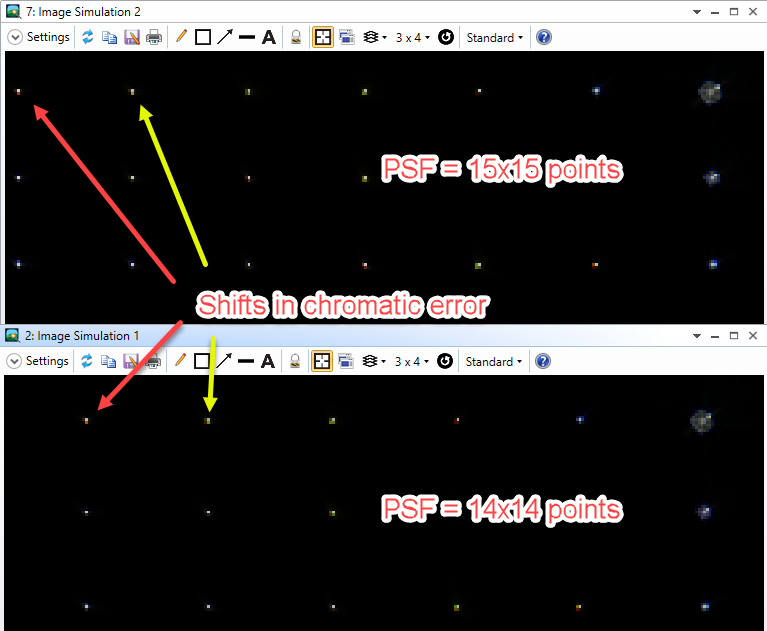

Image Simulation is a powerful tool for generating a simulated final image to evaluate the imaging quality of a lens system. Because of its complexity, it is not easy to get all the settings right. In this talk, let's dive into what's happening behind the scenes and the correct way to simulate a proper image.
