Dear all,

I am performing a tolerance analysis in combination with Physical Optics Propagation (POP), and the computation time is much longer than I expected.

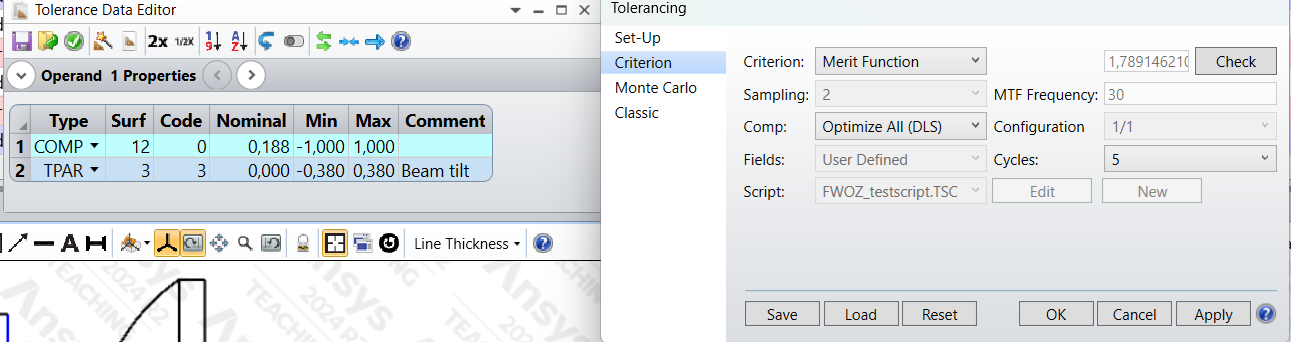

More specifically, I am running a sensitivity analysis with the merit function as the criterion and a thickness compensator (COMP) using 5 optimization cycles. The merit function is the fiber coupling efficiency calculated with POP. The system itself is quite simple: a single lens performs the fiber coupling. The file is attached.

To investigate the long computation time, I ran the sensitivity analysis for a single tolerance operand twice: once manually and once with the tolerance tool.

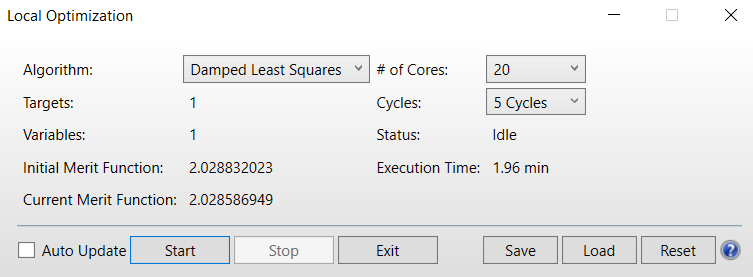

Manually, I changed the value of the operand and then ran the optimizer, which took around 2 minutes:

With the tolerance tool, I added the compensator and the single operand in the Tolerance Data Editor and ran the tolerancing, which took around 12 minutes on a multi-core processor. This is roughly double the time I would expect, about 6 minutes in total (2 minutes each for the nominal value and for the minimum and maximum tolerances):
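For reference, this is the back-of-the-envelope estimate behind that expectation. It assumes that every evaluated case (the nominal plus a min and a max case per tolerance operand) costs about as much as one manual optimization, roughly 2 minutes here, and that the cases run one after another. It is only my rough model, not a description of what the tolerance tool actually does internally; the function name is just for illustration.

```python
def expected_sensitivity_minutes(n_operands: int, minutes_per_case: float = 2.0) -> float:
    """Rough runtime estimate for a sensitivity analysis (illustrative only).

    Assumes one nominal evaluation plus one min and one max case per
    tolerance operand, each costing about one compensator optimization.
    """
    n_cases = 1 + 2 * n_operands
    return n_cases * minutes_per_case


print(expected_sensitivity_minutes(1))   # 6.0
print(expected_sensitivity_minutes(10))  # 42.0
```

By this estimate a single operand should take about 6 minutes, not the roughly 12 minutes I measured.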

Does anyone know what causes this unusually long computation time with the tolerance tool? Am I doing something wrong, or is something going on that I'm not seeing? I don't know what else the sensitivity analysis could be doing differently from the manual approach… When I add multiple tolerance operands (too many to run manually), I see the same behavior, with even longer tolerancing times…

Any feedback would be appreciated!

Best regards,

Indy