Hi,

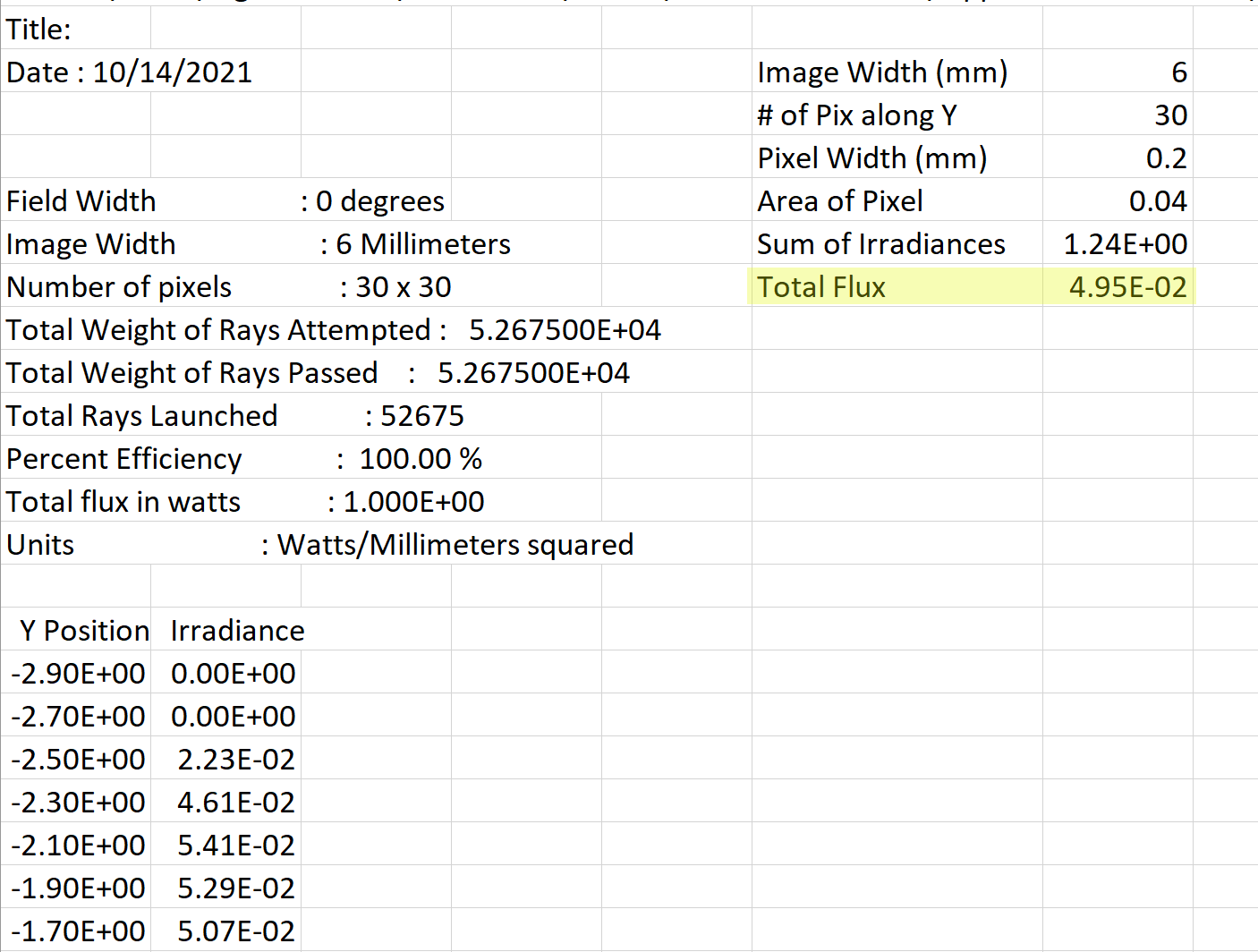

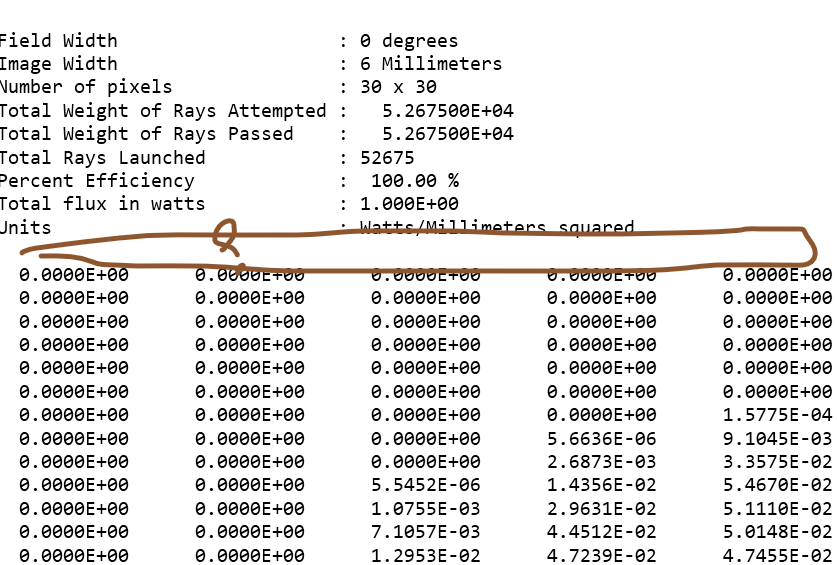

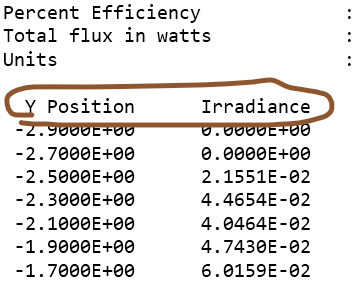

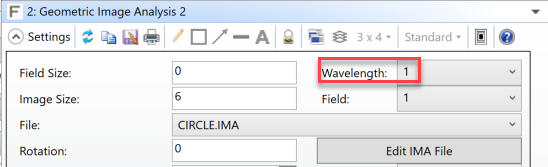

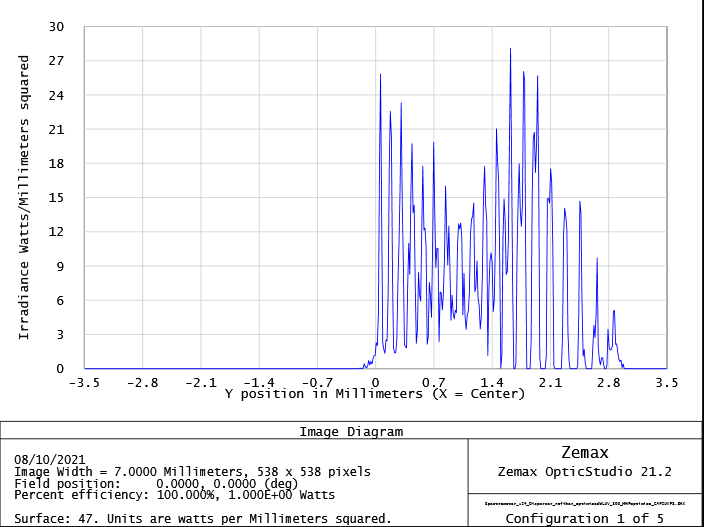

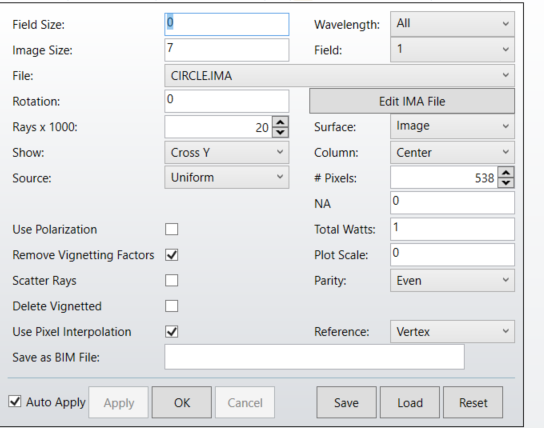

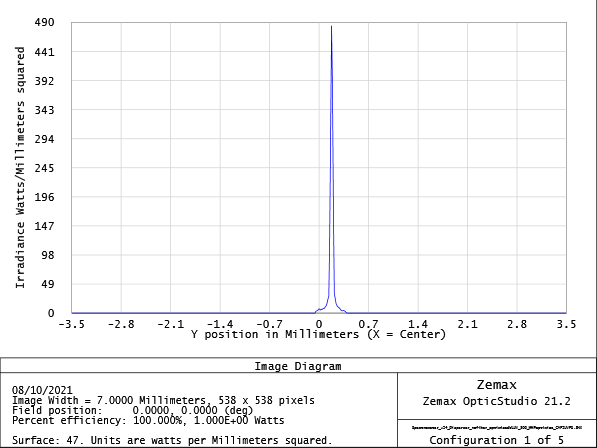

Something does not add up in the calculation of power and efficiency in my spectrometer setup. I have the following reported irradiance, using 7 pixels and 538 pixels (equivalent to a 13 µm pixel size). I set Total Watts to 1 and used input wavelengths from 255 to 345 nm in 5 nm steps, all with a weight of 1.

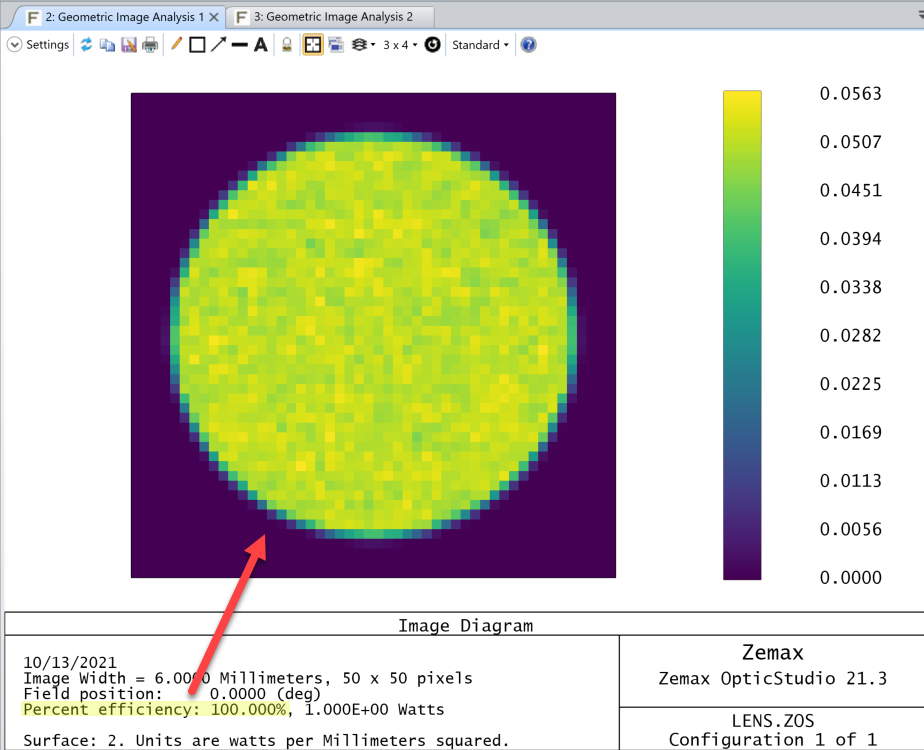

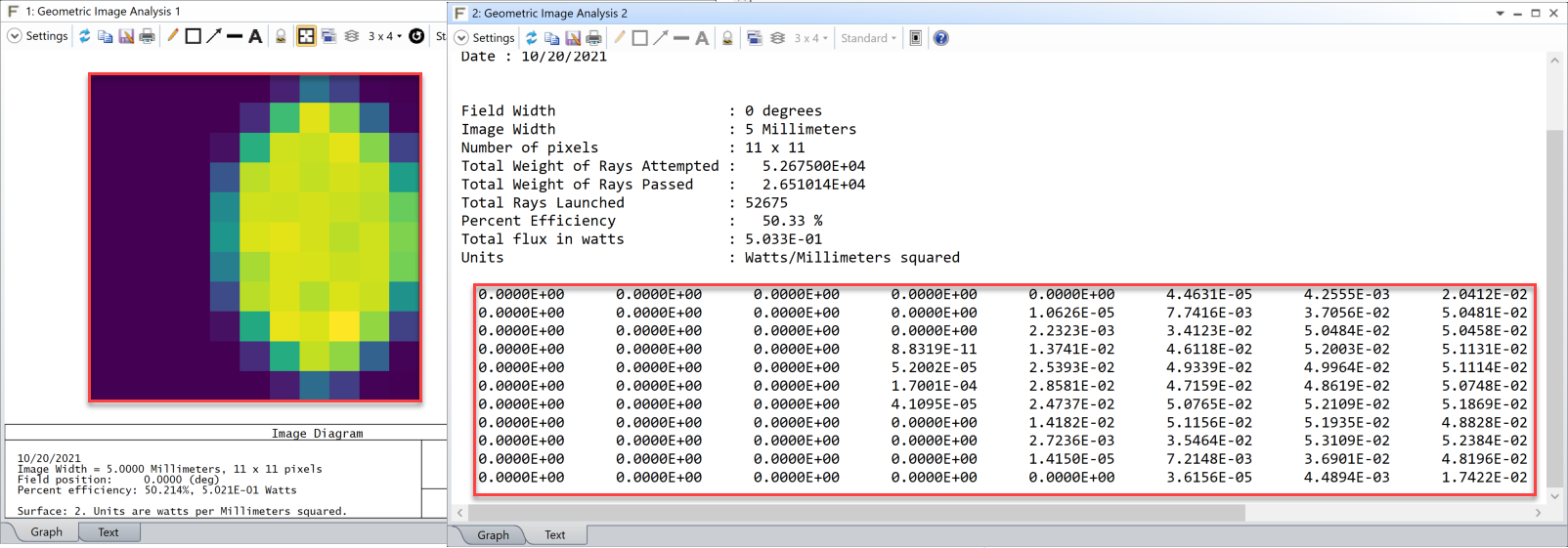

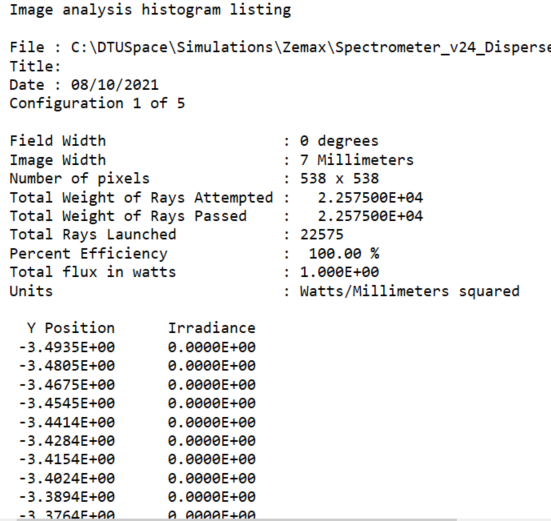

The total efficiency is reported to be 100%. To my understanding, this means 1 W of input should correspond to 1 W on the detector. However, when I take the numerical data, add all the irradiance values together, and multiply the sum by the pixel size (13*13*1e-4), I get only 0.35, which is far from 1 W. How does that work?
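
To make the calculation explicit, here is a minimal Python sketch of what I did. The function name `total_power` and the uniform grid are placeholders for illustration, not the exported detector data, and the pixel-area factor is simply the one quoted above; whether it matches the units of the reported irradiance is part of what I am unsure about.

```python
def total_power(irradiance_rows, pixel_area):
    """Sum every irradiance sample and scale by the area of one pixel.

    irradiance_rows: list of rows, each a list of irradiance values.
    pixel_area: area of one pixel, in units matching the irradiance.
    """
    return sum(sum(row) for row in irradiance_rows) * pixel_area


# Placeholder grid (7 x 538 samples of the same value), NOT the real data.
placeholder = [[0.01] * 538 for _ in range(7)]

# Factor as written in the post; its units are an assumption.
pixel_area = 13 * 13 * 1e-4

print(total_power(placeholder, pixel_area))
```

With the exported values in place of `placeholder`, this is the sum that gives me 0.35.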

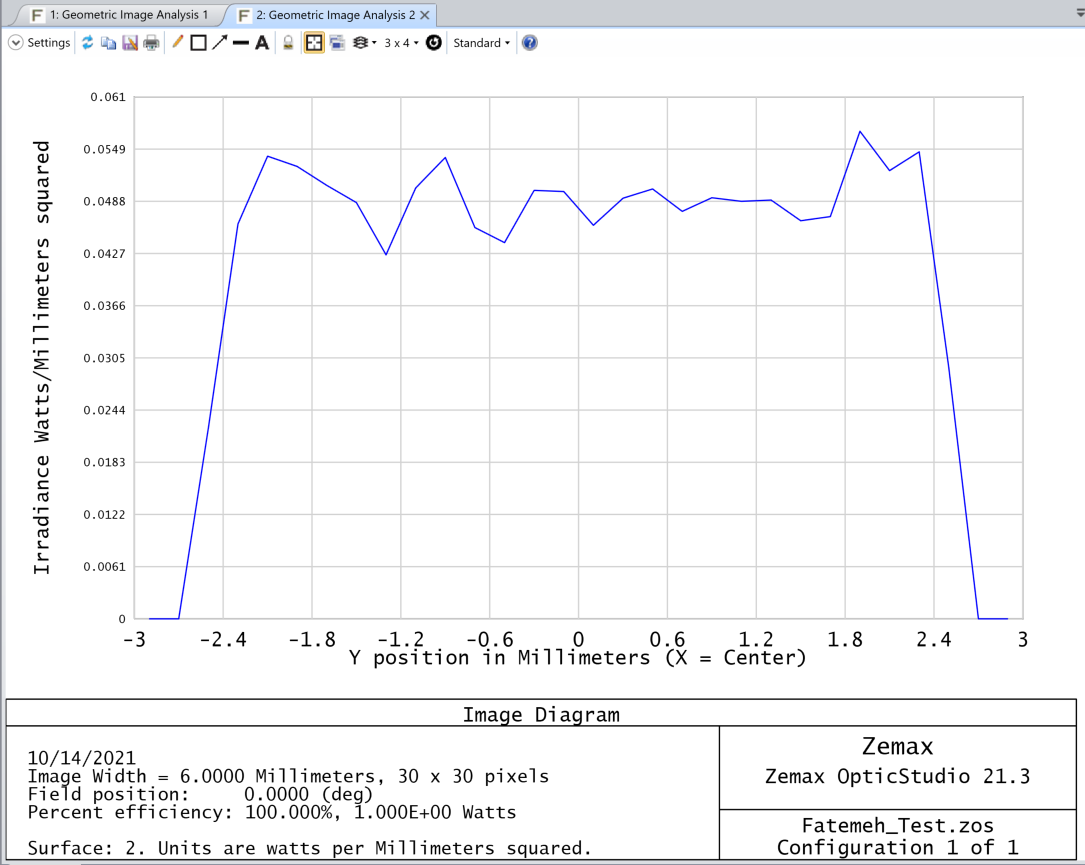

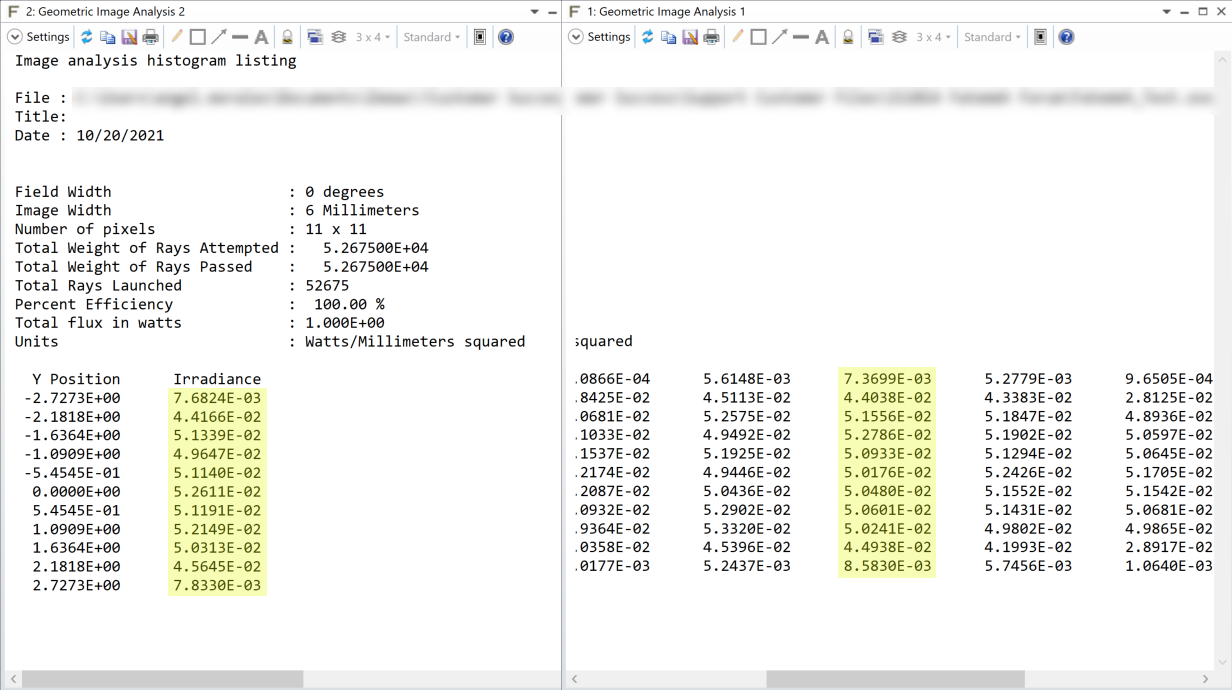

Additionally, I looked at the result for each wavelength separately, with Total Watts set to 1.

I added all the irradiance values over the y-position for each wavelength individually and multiplied the sum by the pixel size (13*13*1e-4). At the end I added the results for all wavelengths together, which gave 5.5 W. Therefore, I do not understand the 100% efficiency here, or why I get more than 1 W.
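
The per-wavelength version of the calculation, again as a rough Python sketch. The names `summed_power` and `profiles` are hypothetical, and the profile values are placeholders rather than my exported data. The wavelength list is 255 to 345 nm in 5 nm steps, which is 19 wavelengths.

```python
# 255, 260, ..., 345 nm (19 wavelengths in total)
wavelengths_nm = list(range(255, 350, 5))


def summed_power(profiles, pixel_area):
    """Integrate each wavelength's profile over y, then add the results.

    profiles: dict mapping wavelength (nm) to a list of values along y.
    pixel_area: area of one pixel, in units matching the values.
    Returns (power per wavelength, total over all wavelengths).
    """
    per_wavelength = {
        wl: sum(values) * pixel_area for wl, values in profiles.items()
    }
    return per_wavelength, sum(per_wavelength.values())


# Placeholder profiles (538 identical samples each), NOT the real data.
profiles = {wl: [0.01] * 538 for wl in wavelengths_nm}

# Factor as written in the post; its units are an assumption.
per_wl, total = summed_power(profiles, 13 * 13 * 1e-4)
print(len(per_wl), total)
```

Each separate run used 1 W as Total Watts, so if every run really delivers 1 W to the detector, adding the 19 results should not be expected to give 1 W. That still does not explain the 5.5 W I get.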

Thank you

Fatemeh