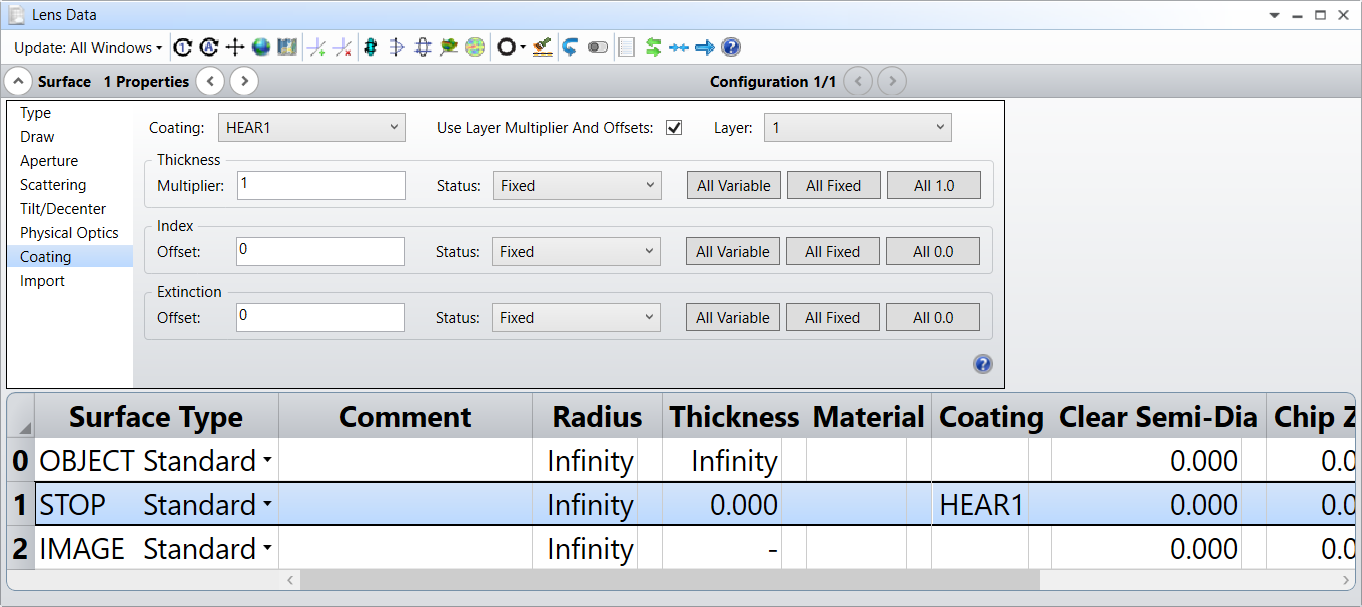

I have a layered coating for which I made a coating file. The file defines each material's index versus wavelength, and then the stack itself: the material and thickness of each layer. I am running a tolerance analysis to see how variations in the layer thicknesses and indices affect the results, but I am seeing almost no sensitivity to either, even when I change the thicknesses by several orders of magnitude. This does not match the real-world data that exists for this system.

Is there a good place to start debugging this? I'm assuming there has to be an error somewhere in the analysis.
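
One sanity check that does not depend on the analysis tool is a standalone thin-film calculation. This is a minimal sketch using the standard characteristic-matrix method, and it assumes normal incidence and non-dispersive indices. The function name `stack_reflectance` and all the numbers in it (a single quarter-wave layer of index 1.38 on a substrate of index 1.52 at 550 nm) are illustrative placeholders, not my actual coating. If even this simple model responds to a thickness change and the tolerance analysis does not, that would suggest the perturbed coating is not reaching the calculation.

```python
import cmath
import math


def stack_reflectance(layers, wavelength_nm, n_incident=1.0, n_substrate=1.52):
    """Reflectance of a thin-film stack at normal incidence.

    layers: list of (refractive_index, thickness_nm) tuples, ordered from the
            incident medium toward the substrate.
    """
    # Start from the 2x2 identity matrix, stored as four scalars.
    m11, m12, m21, m22 = 1 + 0j, 0j, 0j, 1 + 0j
    for n, d in layers:
        delta = 2 * math.pi * n * d / wavelength_nm  # phase thickness of the layer
        c, s = cmath.cos(delta), cmath.sin(delta)
        a11, a12, a21, a22 = c, 1j * s / n, 1j * n * s, c
        # Right-multiply the running product by this layer's matrix.
        m11, m12, m21, m22 = (
            m11 * a11 + m12 * a21,
            m11 * a12 + m12 * a22,
            m21 * a11 + m22 * a21,
            m21 * a12 + m22 * a22,
        )
    # Apply the stack matrix to the substrate vector (1, n_substrate).
    b = m11 + m12 * n_substrate
    c_total = m21 + m22 * n_substrate
    r = (n_incident * b - c_total) / (n_incident * b + c_total)
    return abs(r) ** 2


# Illustrative values only: one quarter-wave layer at 550 nm.
wavelength = 550.0
nominal = [(1.38, wavelength / (4 * 1.38))]
perturbed = [(n, d * 1.10) for n, d in nominal]  # +10% thickness

print("nominal R:  ", stack_reflectance(nominal, wavelength))
print("perturbed R:", stack_reflectance(perturbed, wavelength))
```

The two printed reflectances should differ. A real coating would need the dispersive index data and the actual angles of incidence, so this only checks that sensitivity of this kind is physically expected.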