Hello everyone,

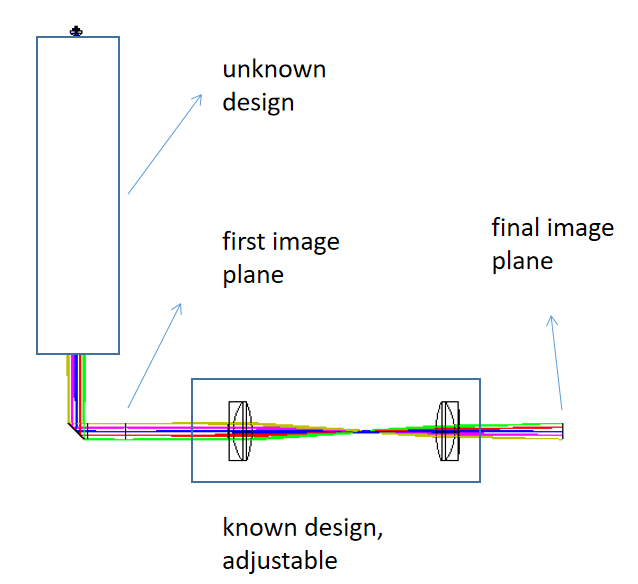

I’ve been racking my brain trying to work out how to balance image aberrations in an optical train when part of the lens design data is unknown. The setup is as follows.

The camera is placed at the final image plane, and the final image is currently not very good. The problem is that there is an unknown design in this optical train, so I can neither adjust nor redesign the later components to balance the aberration in the final image. I came up with the following method: I put an image aberration sensor at the first image plane, and the sensor reads out the aberration of the first image in terms of Zernike coefficients. Then I start a new design, in which I place a dummy surface carrying those Zernike coefficients at the position of the first image plane. With that in place, I can adjust or redesign the later components in the optical train. Does this method make sense?
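To make the "dummy surface" idea concrete, here is a minimal Python sketch of how a set of measured Zernike coefficients could be turned into a wavefront map over a unit-radius aperture. It rests on several assumptions: the coefficients are in waves and use the Noll ordering and normalization (a real sensor may report a different convention, such as fringe or ANSI indexing), only a few low-order terms are included, and the values in `measured` are made up for illustration, as are the names `ZERNIKE_NOLL`, `wavefront_map` and `rms`.

```python
import numpy as np

# A few Noll-normalized Zernike terms on the unit disk (assumed convention).
ZERNIKE_NOLL = {
    1: lambda r, t: np.ones_like(r),                              # piston
    2: lambda r, t: 2 * r * np.cos(t),                            # tilt x
    3: lambda r, t: 2 * r * np.sin(t),                            # tilt y
    4: lambda r, t: np.sqrt(3) * (2 * r**2 - 1),                  # defocus
    5: lambda r, t: np.sqrt(6) * r**2 * np.sin(2 * t),            # oblique astigmatism
    6: lambda r, t: np.sqrt(6) * r**2 * np.cos(2 * t),            # vertical astigmatism
    7: lambda r, t: np.sqrt(8) * (3 * r**3 - 2 * r) * np.sin(t),  # vertical coma
    8: lambda r, t: np.sqrt(8) * (3 * r**3 - 2 * r) * np.cos(t),  # horizontal coma
    11: lambda r, t: np.sqrt(5) * (6 * r**4 - 6 * r**2 + 1),      # primary spherical
}


def wavefront_map(coeffs, n=201):
    """Sum the Zernike terms on an n x n grid; points outside the disk are NaN."""
    x = np.linspace(-1.0, 1.0, n)
    xx, yy = np.meshgrid(x, x)
    rho = np.hypot(xx, yy)
    theta = np.arctan2(yy, xx)
    inside = rho <= 1.0
    w = np.zeros_like(rho)
    for j, c in coeffs.items():
        w += c * ZERNIKE_NOLL[j](rho, theta)
    w[~inside] = np.nan
    return w, inside


def rms(w, inside):
    """RMS wavefront error over the aperture, with the mean (piston) removed."""
    vals = w[inside]
    return float(np.sqrt(np.mean((vals - vals.mean()) ** 2)))


# Hypothetical sensor readout in waves: {Noll index: coefficient}.
measured = {4: 0.10, 7: -0.05, 11: 0.03}
w, mask = wavefront_map(measured)
print("RMS wavefront error (waves):", rms(w, mask))
```

With Noll-normalized terms, the RMS over the aperture should come out close to the root-sum-square of the non-piston coefficients, which is a quick sanity check that the sensor's convention has been interpreted correctly before the same coefficients are typed into a Zernike phase surface in the design software.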