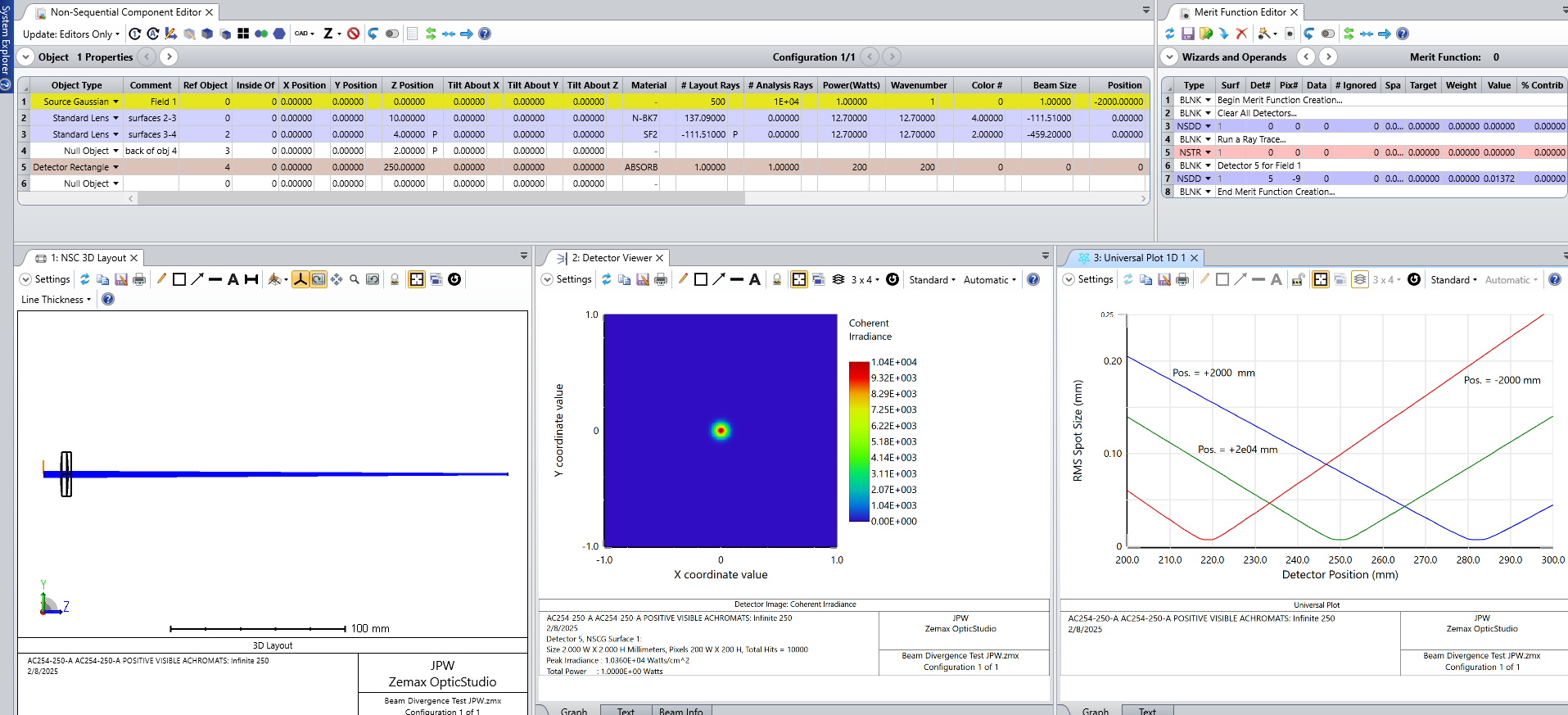

I have set up a simple experiment in non-sequential mode to test the effect of beam divergence on focal position. The lens file I am using is from the Thorlabs website (f = 250 mm, VIS achromat). I set the divergence (or collimation) using a Gaussian Source with the appropriate Beam Size and Position (P) values, and then scan a Detector Rectangle along the optical axis, measuring the beam waist at various locations. Contrary to what I would expect from geometric optics, the focal position shifts closer to the focusing lens with increasing beam divergence; I am using divergence half-angles of 0.04 to 0.08 mrad. Interestingly, when I set the Position value to give a converging beam, the focal position shifts away from the focusing lens. I'm concerned that I have missed something when constructing this model, and was wondering if anyone could help. I have uploaded my Zemax file for convenience. Many thanks, Chris.
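
For comparison, here is a minimal sketch of the geometric-optics expectation described above. It is only an illustration under stated assumptions: the achromat is treated as an ideal thin lens with f = 250 mm, the beam radius at the lens is a hypothetical 5 mm (the actual Beam Size is in the Zemax file, not in this post), and the function names are made up for this example. A positive half-angle means a diverging beam and a negative one a converging beam.

```python
import math

F_MM = 250.0           # focal length quoted in the post
BEAM_RADIUS_MM = 5.0   # hypothetical beam radius at the lens (assumption)


def apparent_source_distance_mm(beam_radius_mm, half_angle_mrad):
    """Distance from the lens to the point the rays appear to come from.

    Positive for a diverging beam (real object point in front of the lens),
    negative for a converging beam (virtual object point behind the lens).
    """
    return beam_radius_mm / math.tan(half_angle_mrad * 1e-3)


def thin_lens_image_distance_mm(f_mm, object_distance_mm):
    """Thin-lens equation 1/s + 1/s' = 1/f solved for the image distance s'."""
    return f_mm * object_distance_mm / (object_distance_mm - f_mm)


def focal_shift_mm(half_angle_mrad, f_mm=F_MM, beam_radius_mm=BEAM_RADIUS_MM):
    """Shift of the focus relative to the collimated-beam focus at f.

    Positive values mean the focus moves away from the lens.
    """
    s = apparent_source_distance_mm(beam_radius_mm, half_angle_mrad)
    return thin_lens_image_distance_mm(f_mm, s) - f_mm


if __name__ == "__main__":
    for theta_mrad in (0.04, 0.06, 0.08, -0.04, -0.08):
        print(f"half-angle {theta_mrad:+.2f} mrad -> "
              f"focal shift {focal_shift_mm(theta_mrad):+.3f} mm")
```

Under these assumptions the thin-lens model predicts a shift away from the lens for a diverging beam (about 0.5 mm at 0.04 mrad and about 1 mm at 0.08 mrad) and towards the lens for a converging beam, which is the opposite sign to what the detector scan shows. A real achromat has thickness, so distances would be measured from its principal planes, but that should not change the direction of the shift.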
