Dear All,

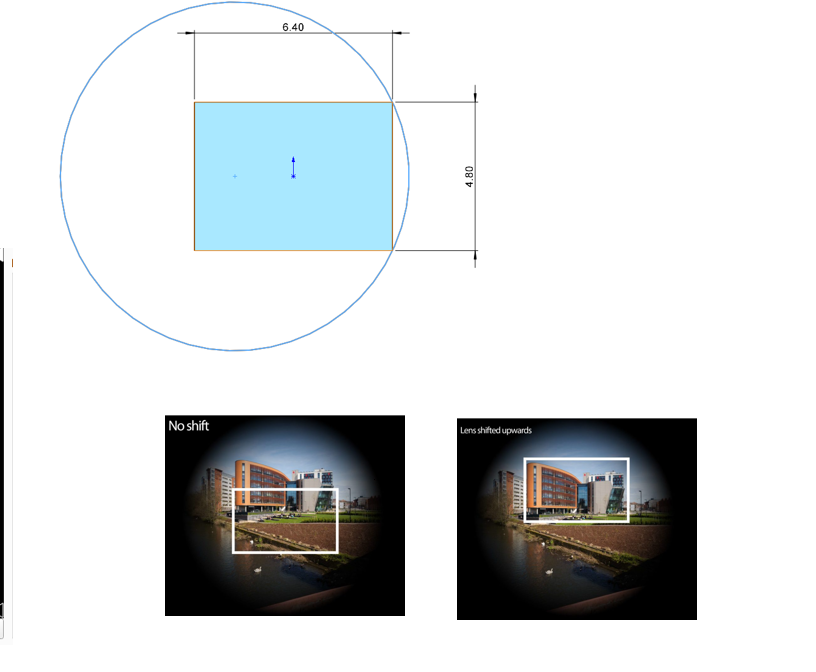

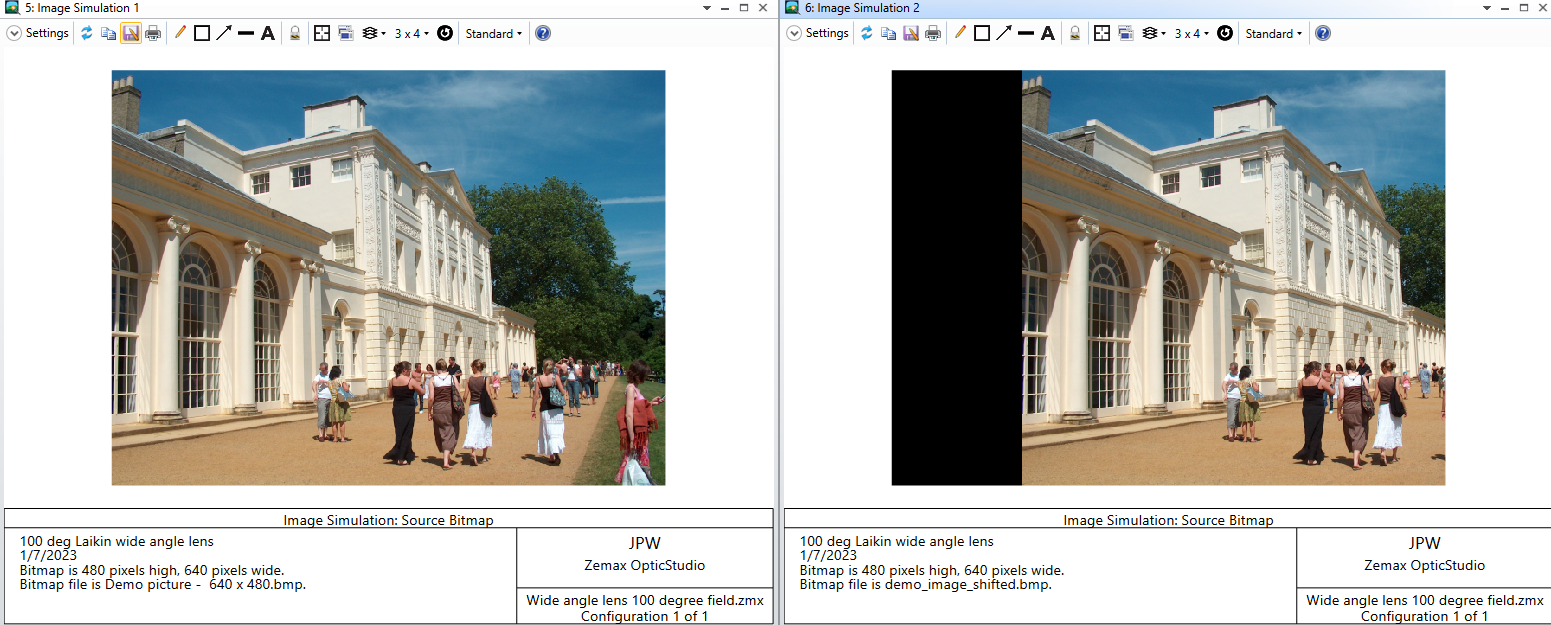

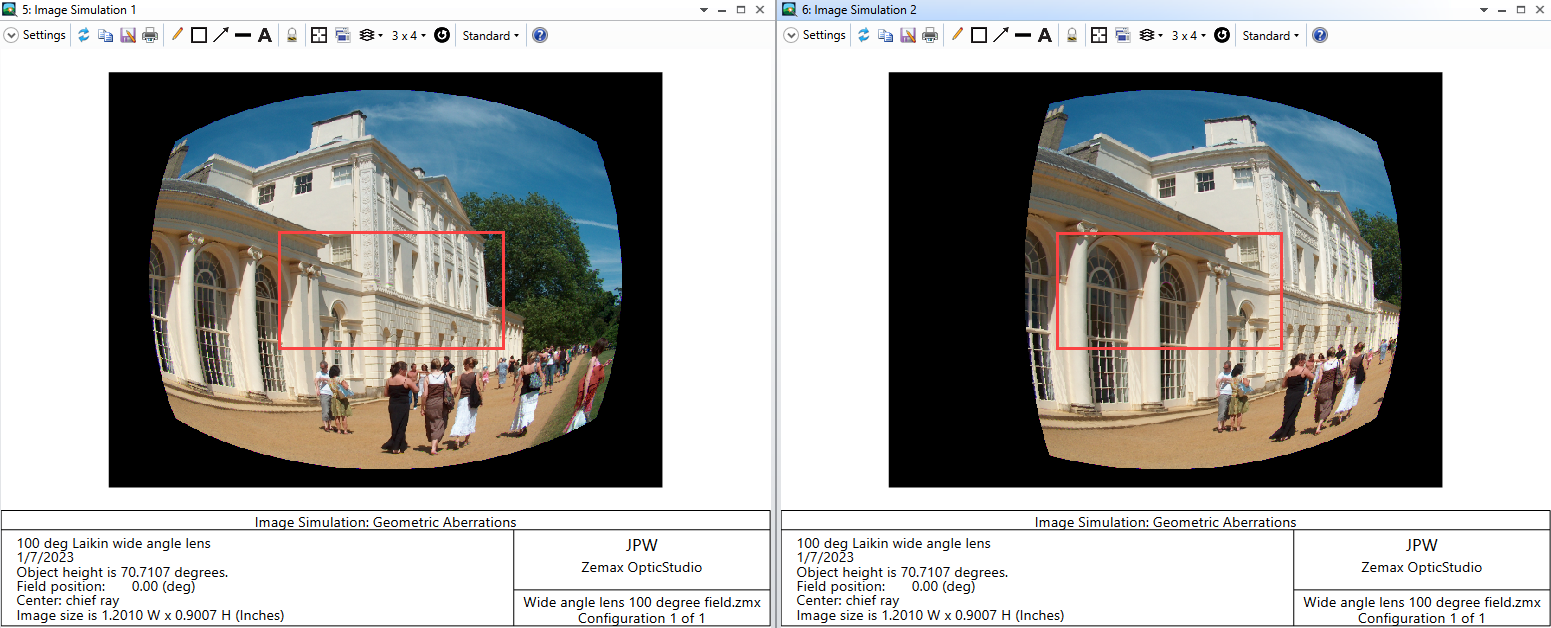

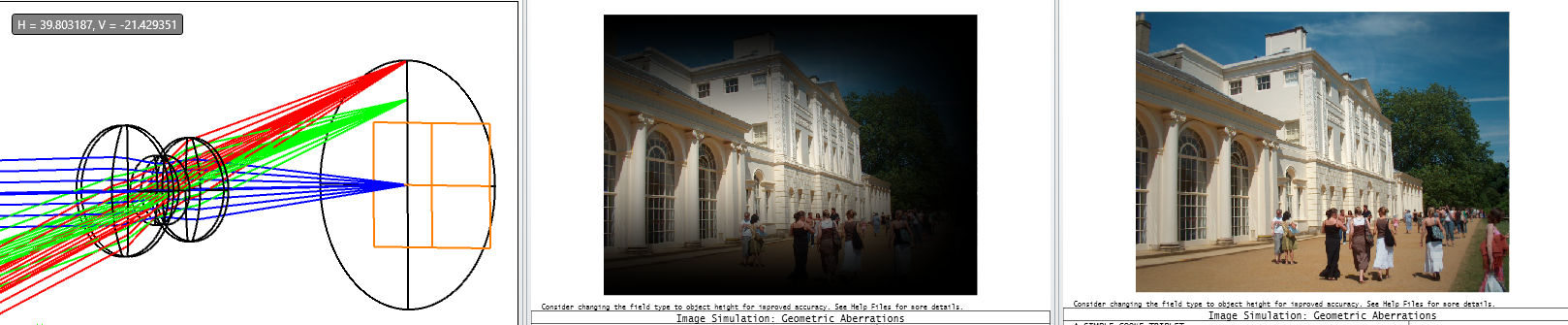

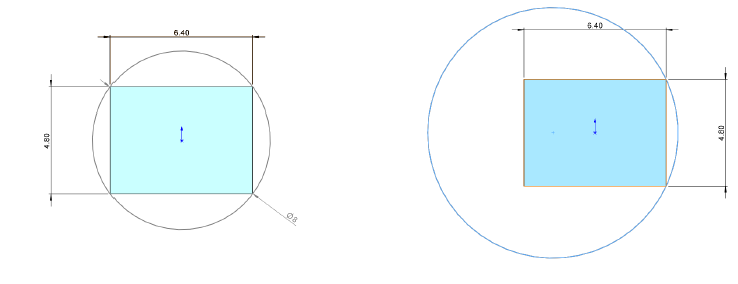

I have a situation where I need to shift the lens (and with it the image circle) relative to the image sensor/plane, and then visualize the result with image simulation. The image circle should be larger than the sensor itself so that no pixels are lost. As an example, the normal case and the shifted case with a larger image circle are shown.

As the images also show, when the lens is shifted the sensor captures a different FOV. This is what I want to simulate and then compare with the unshifted position. How can I achieve that? Simply decentering the image plane doesn’t work; maybe I did something wrong? Another way could be to move the lens system together with the field points, but that doesn’t work either, presumably because the field points are fixed? Any ideas on how to realize this? Thanks in advance.
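To make the geometry I am after concrete, here is a rough Python sketch. It is not tied to any optical design software, and it assumes an ideal, distortion-free lens focused at infinity (image height = f·tan θ). The function names and the example numbers are made up for illustration only. It estimates which field angles land on the sensor edges for a given lens shift, and how large the image circle must be so that no sensor corner falls outside it.

```python
import math


def captured_fov_deg(focal_length_mm, sensor_width_mm, lens_shift_mm=0.0):
    """Field angles (degrees) that land on the two sensor edges.

    Assumes an ideal rectilinear lens (h = f * tan(theta)); this is a
    simplification, not the behaviour of a real lens with distortion.
    Angles are measured in image space relative to the optical axis.
    """
    half = sensor_width_mm / 2.0
    # Shifting the lens by +s moves the optical axis by +s across the sensor,
    # so the sensor edges sit at (-half - s) and (+half - s) from the axis.
    edges = (-half - lens_shift_mm, half - lens_shift_mm)
    return tuple(math.degrees(math.atan(x / focal_length_mm)) for x in edges)


def required_image_circle_radius(sensor_w_mm, sensor_h_mm, shift_x_mm, shift_y_mm):
    """Smallest image-circle radius that still covers every sensor corner."""
    # The farthest corner from the optical axis sets the requirement.
    return math.hypot(sensor_w_mm / 2.0 + abs(shift_x_mm),
                      sensor_h_mm / 2.0 + abs(shift_y_mm))


if __name__ == "__main__":
    # Hypothetical example values: 50 mm lens, 36 x 24 mm sensor, 5 mm shift.
    print(captured_fov_deg(50.0, 36.0, 0.0))
    print(captured_fov_deg(50.0, 36.0, 5.0))
    print(required_image_circle_radius(36.0, 24.0, 5.0, 0.0))
```

With zero shift the two edge angles are symmetric about the axis; with a nonzero shift they become asymmetric, which is the changed FOV I would like to reproduce in the image simulation.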