Hi,

I know a lot of people are like me and obsess about the ‘fastest machine for ray tracing’ and suchlike, so here are my latest insights 😁

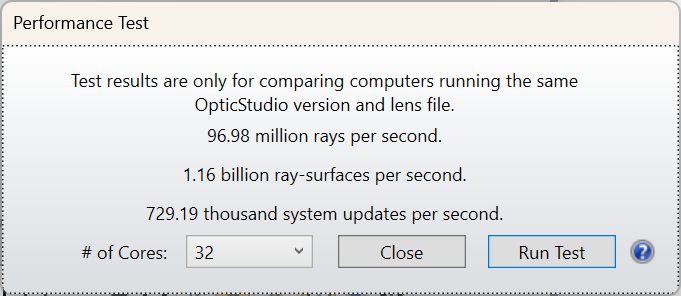

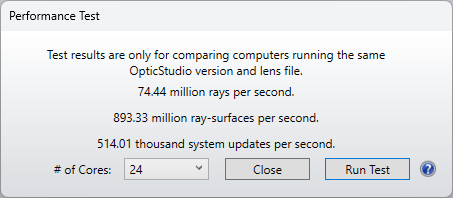

My OpticStudio machine cost ~$2k and is a Dell XPS 8960 from Costco with a 13th Gen i7. It shows as having 24 cores, although I don’t know whether these are all physical cores or include ‘hyperthreaded’ logical cores. My standard test is the OpticStudio Performance Test, using the double Gauss 28 degree field sample file. There is nothing special about this design file, but I have used it since the days of 386 processors, and I know how performance ‘feels’ for a given set of test results. I get the results shown above.

I tend to use the middle number, ray-surfaces per second (RSS), as my main proxy for performance, and here it is almost 900 million RSS. The System Updates number is single-threaded, so it is a good proxy for single-core speed, whereas the RSS calculation is multi-threaded. I’m very pleased with this. My older laptop, a Lenovo that cost ~$1.5k from Costco, gave 240 million RSS.
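To put those two RSS figures side by side, here is a quick back-of-the-envelope ratio. It is only a sketch: the 900 million figure is the approximate "almost 900 million" quoted above, and the constant names and the `speedup` helper are hypothetical, introduced just for illustration.

```python
# Approximate OpticStudio Performance Test results quoted in the post
# (ray-surfaces per second, double Gauss 28 degree sample file).
# Constant names are illustrative only.
DELL_XPS_8960_RSS = 900e6  # "almost 900 million RSS" (approximate)
OLD_LENOVO_RSS = 240e6     # "240 million RSS"


def speedup(new_rss: float, old_rss: float) -> float:
    """Ratio of ray-surfaces per second, new machine over old machine."""
    return new_rss / old_rss


ratio = speedup(DELL_XPS_8960_RSS, OLD_LENOVO_RSS)
print(f"Dell vs Lenovo: {ratio:.2f}x the ray-surfaces per second")
```

On those numbers the desktop traces roughly 3.75 times as many ray-surfaces per second as the old laptop, for about a third more money.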

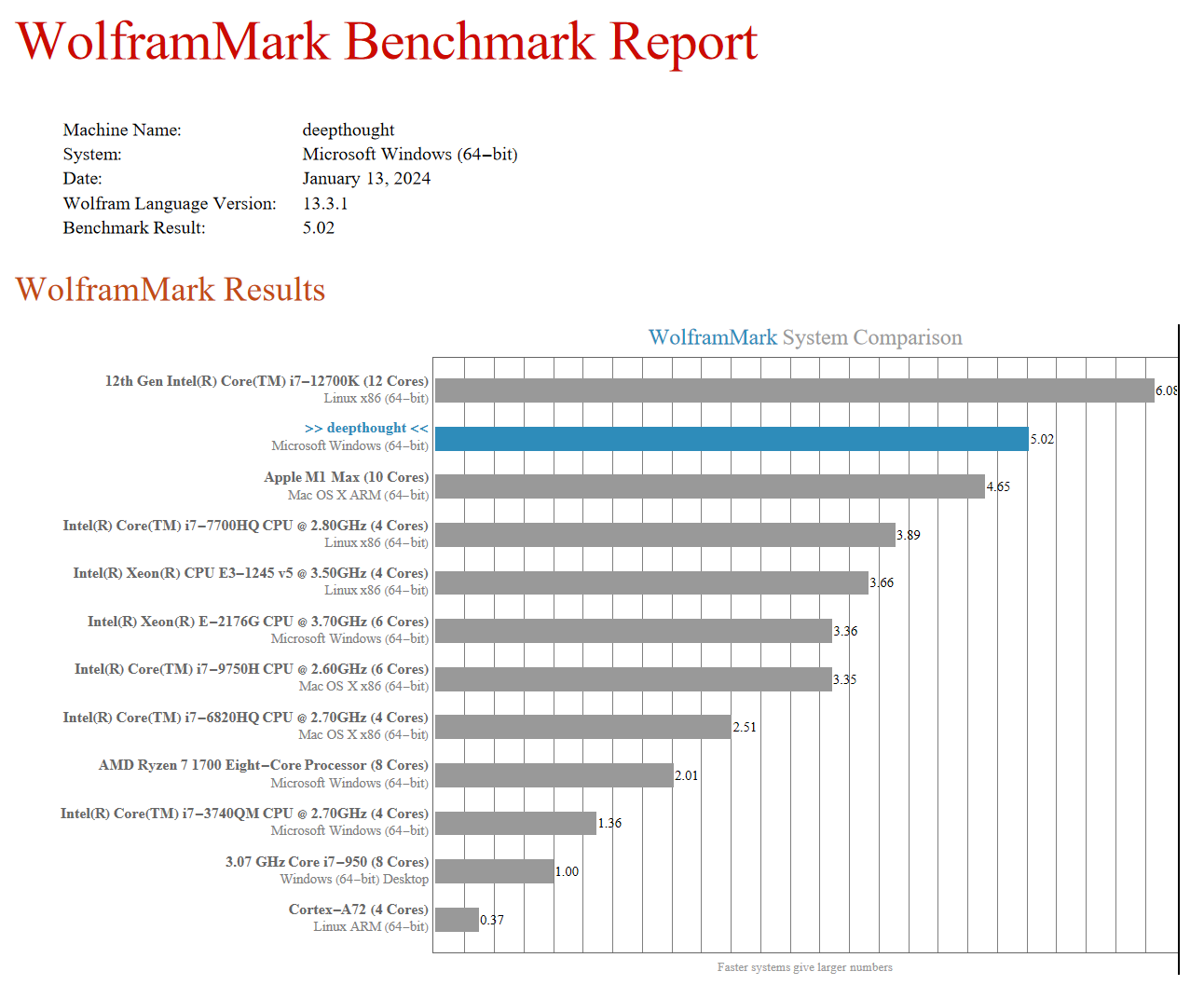

I’ve started using Mathematica, which comes with its own benchmark, and this gives the result shown above.

This doesn’t translate directly into ray-tracing performance, but it is a good test of the machine’s computational muscle, and again I was pleased at how well my Dell-from-Costco did.

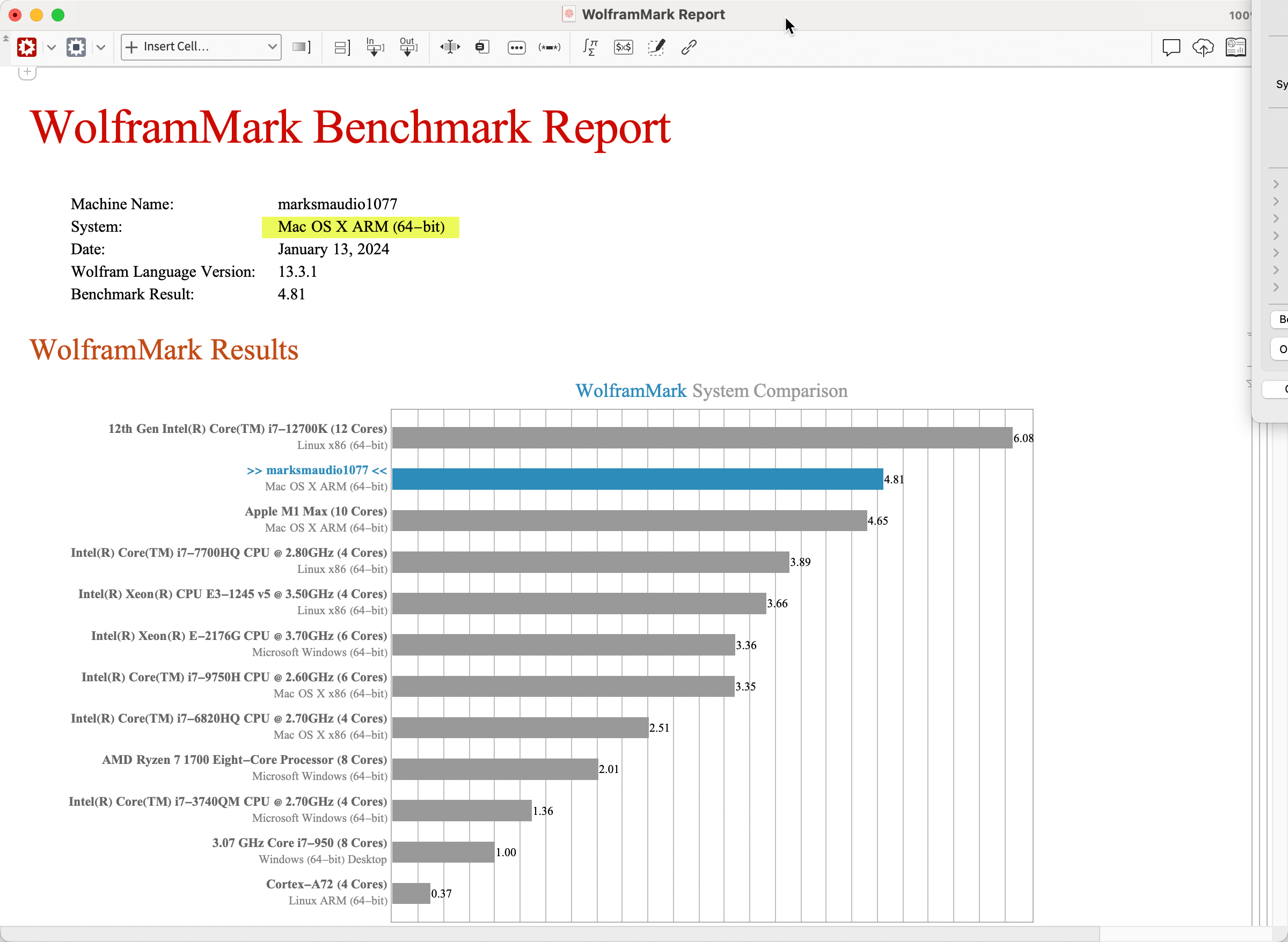

I also have a Mac Studio with an M1 processor, and I know there are other peeps like me who would be keen to run OpticStudio on a Mac if a version were available. Fortunately, Mathematica is available as a native M1 application, so I ran the benchmark there too and got the result shown above.

So the Wolfram benchmark for the M1 machine is 4.81, again very impressive. But my steam Dell gives 5.02, and cost ~$2k versus $3.5k for the Mac (I’m worth it). So any Mac version of OpticStudio would likely run slightly slower on hardware costing about 1.75 times as much. Bad news for Apple fanbois like me. We would, of course, insist on the Mac version being better in so many other, yet indefinable, ways.
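For anyone who wants the value-for-money comparison spelled out, the sketch below divides each Wolfram benchmark score by price. It assumes (simplistically) that score per dollar is a fair value measure and uses the approximate prices quoted above; the `score_per_kusd` helper and the dictionary layout are hypothetical, for illustration only.

```python
# Wolfram benchmark scores and approximate prices quoted in the post.
# Higher benchmark score = faster machine.
machines = {
    "Dell XPS 8960 (13th Gen i7)": {"score": 5.02, "price_usd": 2000},
    "Mac Studio (M1)": {"score": 4.81, "price_usd": 3500},
}


def score_per_kusd(score: float, price_usd: float) -> float:
    """Benchmark score per $1,000 spent (illustrative value metric)."""
    return score / (price_usd / 1000)


for name, m in machines.items():
    value = score_per_kusd(m["score"], m["price_usd"])
    print(f"{name}: {value:.2f} benchmark points per $1k")

dell = machines["Dell XPS 8960 (13th Gen i7)"]
mac = machines["Mac Studio (M1)"]
price_ratio = mac["price_usd"] / dell["price_usd"]  # how much more the Mac costs
perf_ratio = dell["score"] / mac["score"]           # how much faster the Dell scores
print(f"Mac costs {price_ratio:.2f}x as much; Dell scores {perf_ratio:.2f}x higher")
```

This only restates the benchmark arithmetic; as noted above, the Wolfram score is not a ray-tracing measurement.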

One last thing. The Dell comes with an NVIDIA GeForce RTX 3060 Ti, a reasonably respectable gaming card. Not top end, but still very good. It has little impact on OpticStudio calculation performance, except that the editors are much more responsive. I can’t really tell much difference between the Normal and Express views of the editor. Express is still the faster of the two, as expected, but it gives up a lot of functionality for little perceived speed gain. So the advice I’ve been giving for years, that the graphics card isn’t that important as long as it supports DirectX 11 (which they all do now), is not as true as it once was: a better graphics card does seem to help the Normal view of the editors. I saw no discernible difference in Analysis window graphics or in layout drawing speed, however.

- Mark