This thread is dedicated to the upcoming webinar: Modeling Flash Lidar in OpticStudio, Part 2: Lidar Simulation in Non-Sequential Mode. Any questions received during the webinar will be answered in replies on this thread. Feel free to post your own questions! The speaker will be notified and will respond as long as the thread is still open.

Be sure to subscribe to this thread if you want to see additional discussion regarding this webinar topic. The thread will be open to new replies for a limited time following the event.

[The webinar has concluded]

Webinar details

Date: Thursday, August 18th

Time: 6:00 - 6:45 AM PDT | 11:00 - 11:45 AM PDT

Presenter: Angel Morales, Senior Application Engineer at Ansys Zemax

Abstract:

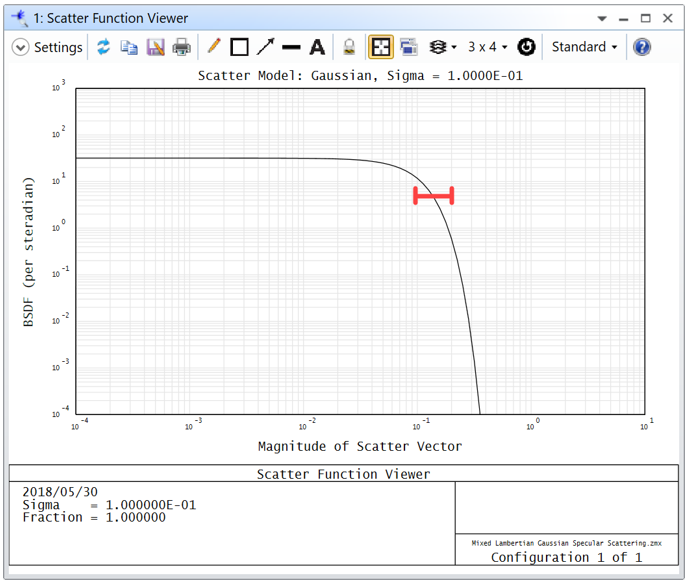

In the consumer electronics space, engineers leverage lidar for several functions, such as facial recognition and 3D mapping. While lidar systems come in many different embodiments, a flash lidar solution uses solid-state optical elements to generate an array of detectable points across a target scene. This technology enables compact packaging and has made detailed spatial data acquisition more commonplace in consumer products such as smartphones and tablets. In Part 2 of this webinar series, we will convert our sequential starting points from Part 1 into a non-sequential model and add further detail to it. We will also use the ZOS-API to generate time-of-flight results for our flash lidar system.